The U.S. Federal Election Commission (FEC) has come under scrutiny for its recent refusal to ban or regulate political deepfakes, drawing criticism from democracy advocacy groups and raising concerns about the potential impact on democratic processes. Advocates have condemned the FEC's decision and called for immediate action from Congress and the states to address the issue before the 2024 election. They argue that the US government is not treating the issue with the urgency it requires. So what is the reality? Is AI really a major threat to our democracy? Here is a summary of the implications of unregulated deepfakes in political campaigns.

The Potential Threat of Deepfakes in Elections

“Deepfakes are a fundamental threat to democracy and to any civilisation that relies on the truth… Deepfakes could very well undermine our sense of reality”

Matthew Ferraro @MatthewFFerraro, attorney, WilmerHale

Deepfakes are audio, images, or videos manipulated or generated with AI technology to create realistic yet false representations. Many social media users will have seen the fake Obama video that circulated via BuzzFeed five years ago, when the technology was first emerging. Half a decade on, the technology is well and truly here, and recent advances in AI have made high-quality deepfakes far cheaper to produce. However, the laws governing use of this technology have not caught up.

In the context of political campaigns, deepfakes can be used to spread misinformation, distort candidates' statements, and manipulate public opinion. The concern is that without regulation, the proliferation of deepfakes could undermine trust in the authenticity of information and disrupt the democratic process. Public Citizen argues that the existing federal law prohibiting fraudulent misrepresentation should apply to AI-generated deepfakes in campaign ads.

As Public Citizen president Robert Weissman has previously said:

“Generative A.I. now poses a significant threat to truth and democracy as we know it… The technology threatens election-altering hoaxes, on the one hand, and a destruction of voters’ ability to believe even truthful images and information, on the other.”

Our previous article on this issue gives more detail on the risks involved with AI on the campaign trail.

Manipulative AI-Generated Campaign Ads

“Is the real fear Skynet from 'Terminator,' or is it a fake presidential candidate who says something so outrageous that it can't be put back in the box”

Jim Cramer, news anchor

The issue of manipulative AI-generated campaign ads is not theoretical. The campaign of Republican presidential candidate Ron DeSantis released a video featuring AI-generated fake images of former President Donald Trump embracing Dr. Anthony Fauci, which fueled controversy. Public Citizen called on DeSantis' campaign to remove the deceptive video and pledge not to use deepfake technology. The incident highlights the potential impact of deepfakes on public perception of reality and the case for effective regulation in this area.

The FEC's Controversial Decision

President of the advocacy group Public Citizen, Robert Weissman, has expressed disappointment with the FEC's refusal last week to use its existing authority to address the use of deceptive deepfakes in elections. He labeled it a "shocking failure" on the part of the agency and emphasized the urgent need for Congress and states to intervene. Public Citizen had petitioned the FEC to ban or restrict intentionally misleading campaign ads generated through artificial intelligence. However, the FEC declined to open a full rulemaking process or even publish the petition for public input, leading to criticism from advocates.

Are There Technical Solutions?

Like laws and policy, technical solutions for distinguishing AI-generated content from human-made content are also struggling to keep up. While software exists that can detect AI output and AI tools can watermark their produced images or text, these responses have limitations. There is no universal standard for identifying real or fake content, and detectors must be continuously updated as AI technology advances. Additionally, open-source AI models may not include watermarks. ‘Content provenance’, which aims to identify the origin of digital media, is one approach, but comprehensive solutions are still needed to easily differentiate between human and AI-generated content.

Multi-pronged Approach Needed?

"We should be looking at the various ways of mitigating these risks that we already have and thinking about how to adapt them to AI"

Arvind Narayanan, Princeton University Computer Science Professor

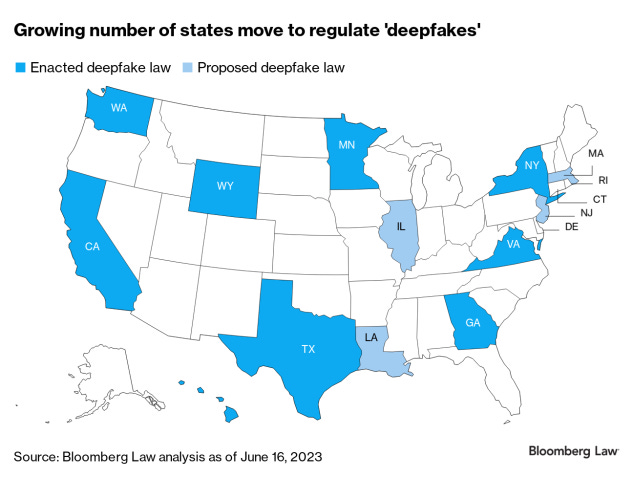

The challenge of distinguishing AI-generated content from human-made content requires a combination of approaches. Laws and regulations can play a role, particularly in high-risk areas such as nonconsensual deepfake pornography or deepfakes targeting election candidates or workers. Some states already have laws banning certain types of deepfakes, and copyright law can be used in specific cases. The Biden administration and Congress have signaled their intention to address the issue through regulation, while the European Union is leading the way with its AI Act, which is almost complete.

However, regulation alone is not enough. Individuals also need media literacy and critical thinking skills to identify and verify content. Fact-checking and healthy skepticism help people mitigate the risks of AI-generated content and navigate its impact on their lives and on democratic processes. Better media literacy education would be highly beneficial alongside any regulatory approach.

The Urgent Need for Action

“Pretty soon we won’t be able to tell the difference between what is real and what is fake. When these deepfakes take the form of campaign ads, the integrity of our elections is in deep trouble.”

Video by Lisa Gilbert, Executive VP at Public Citizen @Lisa_PubCitizen

All this emphasizes that the increasing sophistication of generative AI and deepfake technology poses a significant threat to democracy and truth. Many are concerned that a deepfake video released shortly before the 2024 election could go viral, leaving voters unable to distinguish between real and fake content. To prevent such abuses, the political parties, presidential candidates, and the FEC all need to take action and create certainty on this issue. This may include disavowing and banning the use of AI in political campaigns, or some lesser regulation. Regardless of the type of action, most can agree that some immediate action is necessary to provide clarity and protect the integrity of elections and the functioning of democracy.

Conclusion

The unregulated use of AI-generated deepfakes in political campaigns raises grave concerns about the spread of misinformation, distortion of candidates' statements, and erosion of public trust. Many in politics, media and academia believe urgent action from Congress and the states is needed to regulate the use of deceptive deepfakes, provide certainty, and safeguard the democratic process. Without robust regulation and better media literacy education, many believe, the threat of deepfakes will loom large over next year’s election, compromising its integrity and the ability of voters to make informed decisions.

What do you think?

Is AI a Threat to Democracy?

Have Your Say, Secure Your Future

The Voices of America Scholarship is now inviting submissions on 10 prompts. Just answer 3 prompts to have a chance of winning a share of the scholarship funds. See our social media channels below or this blog for more information on the questions and how to win.

(Remember to quote the question at the start so people know the context by saying, "this is my BattlePACs submission for the prompt of …")

Social Platforms Accepted: Instagram or TikTok

Hashtags: #BattlePACs #BattlePACsScholarship

Tag: IG - @battlepacsofficial or TikTok - @battlepacs

Follow: BattlePACs socials below